Nutr Res Pract. 2019 Dec;13(6):521-528. doi: 10.4162/nrp.2019.13.6.521.

The development of food image detection and recognition model of Korean food for mobile dietary management

Affiliations

- ¹Department of Food and Nutrition, College of BioNano Technology, Gachon University, 1342 Seongnam-daero, Sujeong-gu, Seongnam-si, Gyeonggi 13120, Korea. skysea@gachon.ac.kr

- ²Department of Computer Engineering, College of IT, Gachon University, 1342 Seongnam-daero, Sujeong-gu, Seongnam-si, Gyeonggi 13120, Korea. yicho@gachon.ac.kr

- ³Research Group of Functional Food Materials, Korea Food Research Institute, Wanju 55365, Korea.

- KMID: 2464131

- DOI: http://doi.org/10.4162/nrp.2019.13.6.521

Abstract

BACKGROUND/OBJECTIVES

The aim of this study was to develop a Korean food image detection and recognition model for use on mobile devices, enabling accurate estimation of dietary intake.

SUBJECTS/METHODS

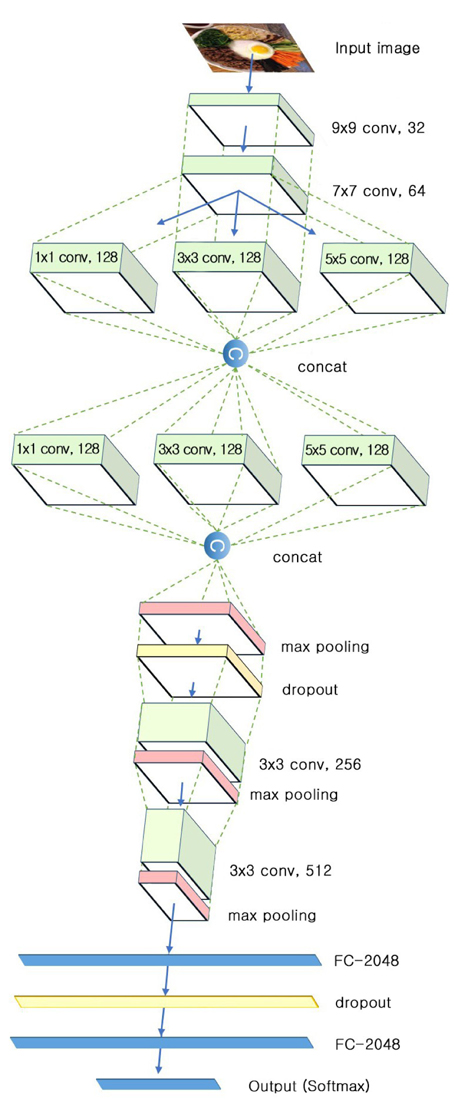

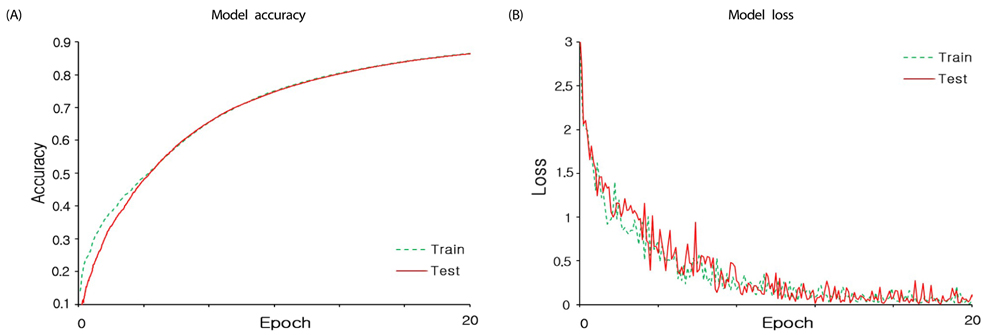

We collected food images by taking photographs and by searching the web, and built an image dataset for training a complex recognition model for Korean food. Data augmentation was applied to increase the dataset size. The final dataset contained more than 92,000 images in 23 categories of Korean food. All images were down-sampled to a fixed resolution of 150 × 150 pixels and then randomly divided into training and test sets at a ratio of 3:1, yielding 69,000 training images and 23,000 test images. We used a Deep Convolutional Neural Network (DCNN) for the complex recognition model and compared its results with those of other networks for large-scale image recognition: AlexNet, GoogLeNet, VGG (Very Deep Convolutional Network) and ResNet.
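The preprocessing steps described above (down-sampling to 150 × 150, augmentation, and a random 3:1 split) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the abstract names neither the interpolation method nor the augmentation techniques, so nearest-neighbour resizing, horizontal flips and 90° rotations are hypothetical choices, and the helper names `downsample`, `augment` and `split_3_to_1` are illustrative.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]  # image stored as rows of RGB pixels
T = TypeVar("T")

TARGET_SIZE = 150  # fixed resolution (150 x 150) stated in the abstract


def downsample(img: Image, size: int = TARGET_SIZE) -> Image:
    """Resize to size x size by nearest-neighbour sampling.

    Assumption: the abstract does not name an interpolation method.
    """
    h, w = len(img), len(img[0])
    return [[img[r * h // size][c * w // size] for c in range(size)]
            for r in range(size)]


def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def augment(img: Image) -> List[Image]:
    """Return the original plus flipped and rotated copies.

    Hypothetical augmentation set; the abstract does not list its techniques.
    """
    return [img, hflip(img), rotate90(img)]


def split_3_to_1(items: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle items and split them into training and test sets at 3:1."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) * 3 // 4
    return shuffled[:cut], shuffled[cut:]
```

With 92,000 items, `split_3_to_1` returns 69,000 training and 23,000 test items, matching the counts reported above; the fixed `seed` makes the random split reproducible.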

RESULTS

Our complex food recognition model, K-foodNet, achieved higher test accuracy (91.3%) and a shorter recognition time (0.4 ms) than the other networks.
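The two reported figures, test accuracy and recognition time, can be computed along the following lines. This is a minimal sketch assuming that "test accuracy" means top-1 accuracy over the test set and "recognition time" means mean wall-clock inference time per image; the abstract defines neither, and `classify` is a hypothetical stand-in for a trained model such as K-foodNet.

```python
import time
from typing import Callable, Sequence, Tuple


def evaluate(classify: Callable[[object], int],
             samples: Sequence[Tuple[object, int]]) -> Tuple[float, float]:
    """Return (top-1 accuracy in %, mean recognition time per image in ms).

    `classify` is a hypothetical model function mapping an image to a class index.
    """
    if not samples:
        raise ValueError("samples must not be empty")
    correct = 0
    start = time.perf_counter()
    for image, label in samples:
        # Top-1: a prediction counts only if the single predicted class matches.
        if classify(image) == label:
            correct += 1
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    n = len(samples)
    return 100.0 * correct / n, elapsed_ms / n
```

Timing the whole loop and dividing by the number of images averages out per-call timer noise; on a real model the first few calls are often slower (warm-up), so excluding them gives a steadier estimate.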

CONCLUSION

The results showed that K-foodNet outperformed other state-of-the-art models in detecting and recognizing Korean food.




Similar articles

- A Study on Dietary Habits, Dietary Behaviors and Body Image Recognition of Nutrition Knowledge after Nutrition Education for Obese Children in Seoul

- A Study on Body Image Recognition, Food Habits, Food Behaviors and Nutrient Intake according to the Obesity Index of Elementary Children in Changwon

- Development and User Satisfaction of a Mobile Phone Application for Image-based Dietary Assessment

- Effect of Two-year Course of Food and Nutrition on Improving Nutrition Knowledge, Dietary Attitudes and Food Habits of Junior College Female Students

- Correlation between adolescents’ dietary safety management competency and value recognition, efficacy, and competency of convergence using dietary area: a descriptive study