Anesth Pain Med.

2023 Jul;18(3):244-251. doi: 10.17085/apm.23056.

Utilizing ChatGPT in clinical research related to anesthesiology: a comprehensive review of opportunities and limitations

- Affiliations

- 1Department of Anesthesiology and Pain Medicine, Asan Medical Center, University of Ulsan College of Medicine, Seoul, Korea

- KMID: 2547032

- DOI: http://doi.org/10.17085/apm.23056

Abstract

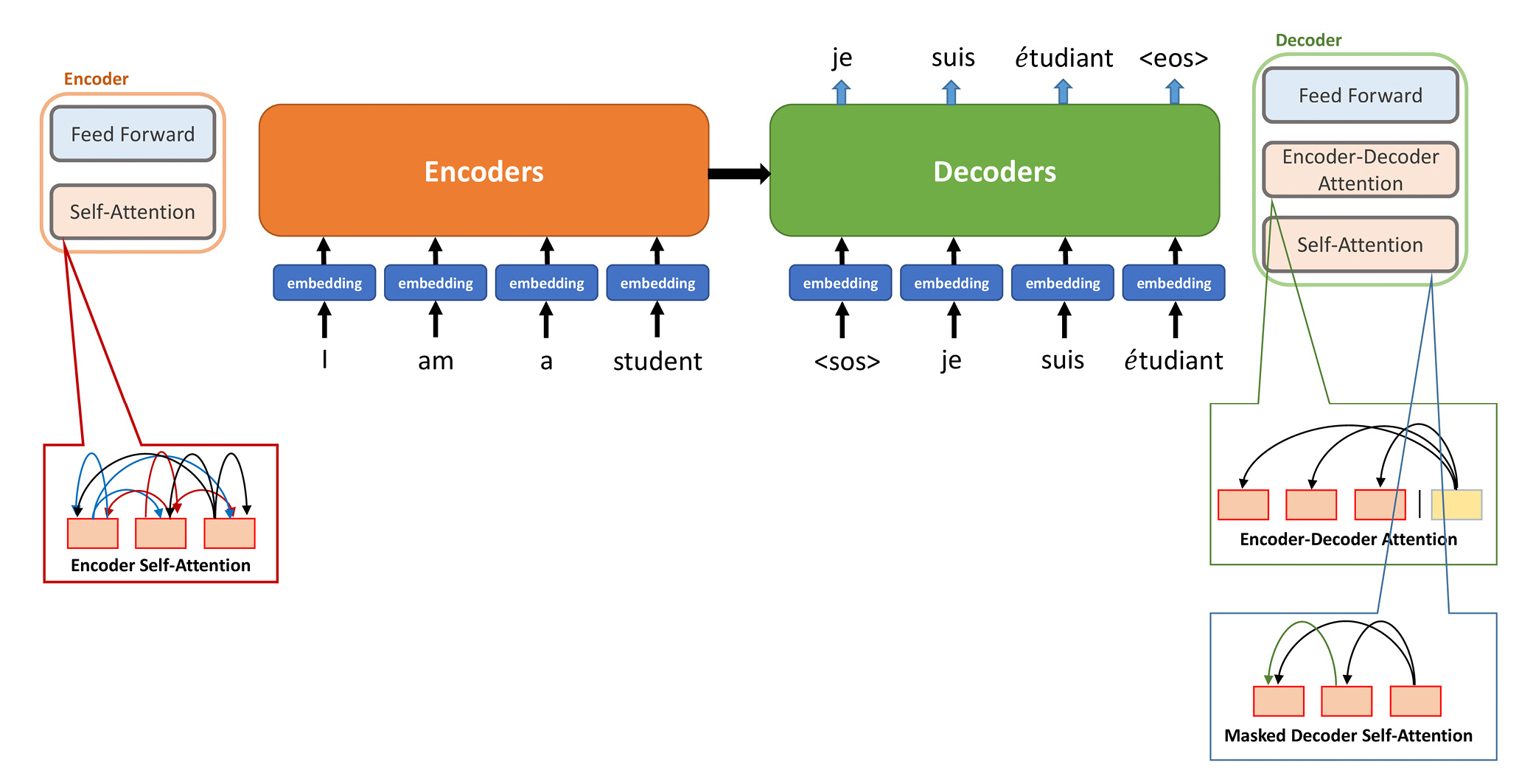

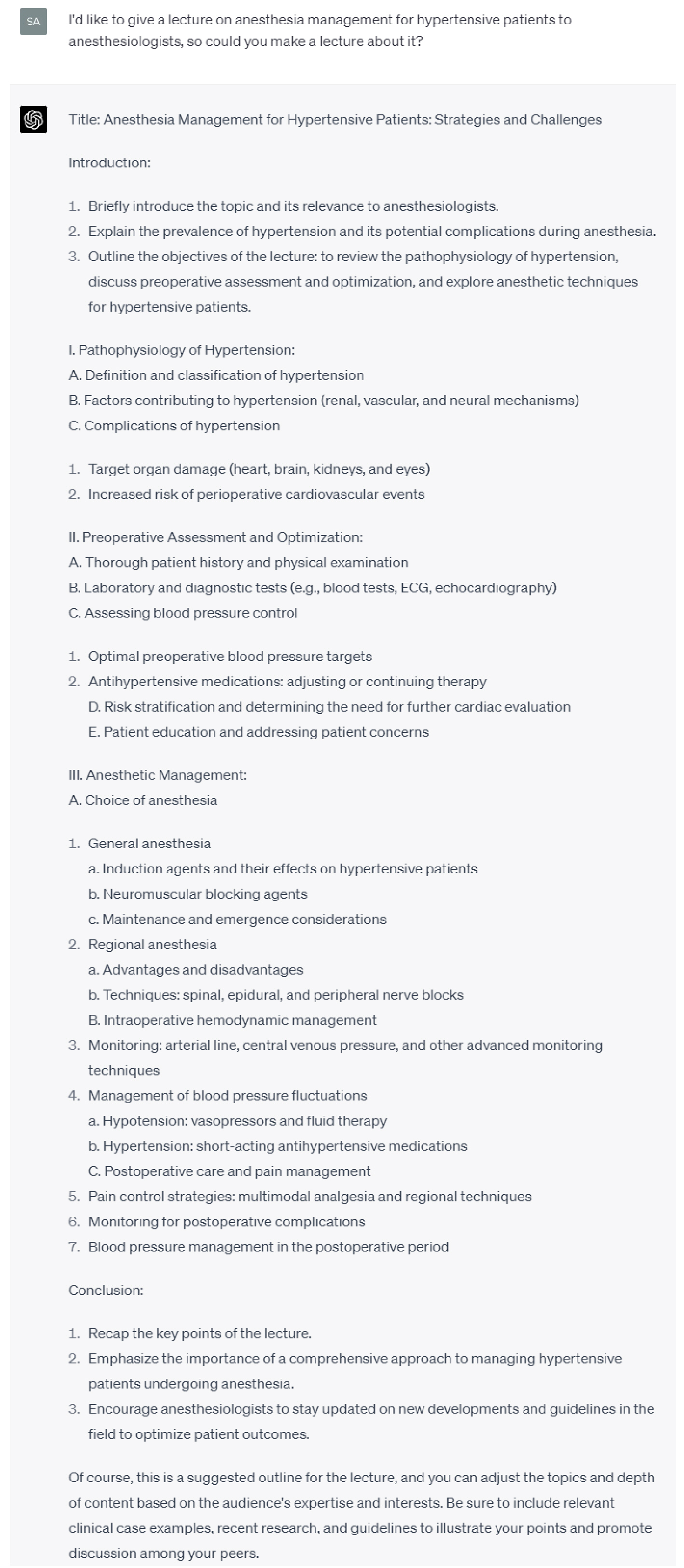

- Chat generative pre-trained transformer (ChatGPT) is a chatbot developed by OpenAI that answers questions in a human-like manner. It is a GPT language model that understands and responds to natural language, built on the transformer, an artificial neural network architecture first introduced by Google in 2017. ChatGPT can be used to identify research topics and to proofread English writing and R scripts, which improves work efficiency and saves time. Attempts to actively use generative artificial intelligence (AI) in clinical settings are expected to continue. However, ChatGPT still has many limitations that hinder its widespread use in clinical research, owing to AI hallucinations and the constraints of its training data. Researchers recommend avoiding the use of ChatGPT for scientific writing in many traditional journals because guidelines on the originality and plagiarism of ChatGPT-generated content are currently lacking. Further regulation and discussion of these topics are expected.
