Clin Endosc. 2020 Mar;53(2):117-126. doi: 10.5946/ce.2020.054.

Convolutional Neural Network Technology in Endoscopic Imaging: Artificial Intelligence for Endoscopy

Affiliations

- (1) Department of Convergence Medicine, University of Ulsan College of Medicine, Seoul, Korea
- (2) Promedius, Inc., Seoul, Korea
- (3) Department of Gastroenterology, Asan Medical Center, University of Ulsan College of Medicine, Seoul, Korea
- (4) Department of Radiology, Asan Medical Center, Seoul, Korea

- KMID: 2500880

- DOI: http://doi.org/10.5946/ce.2020.054

Abstract

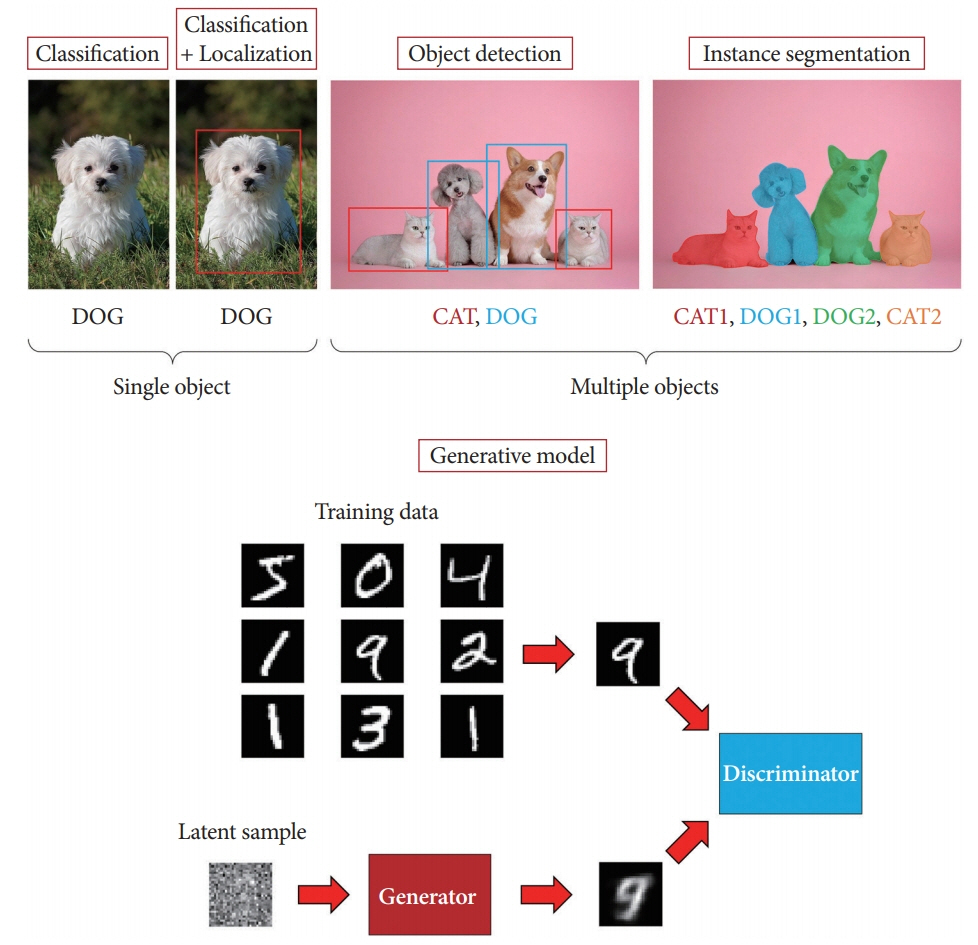

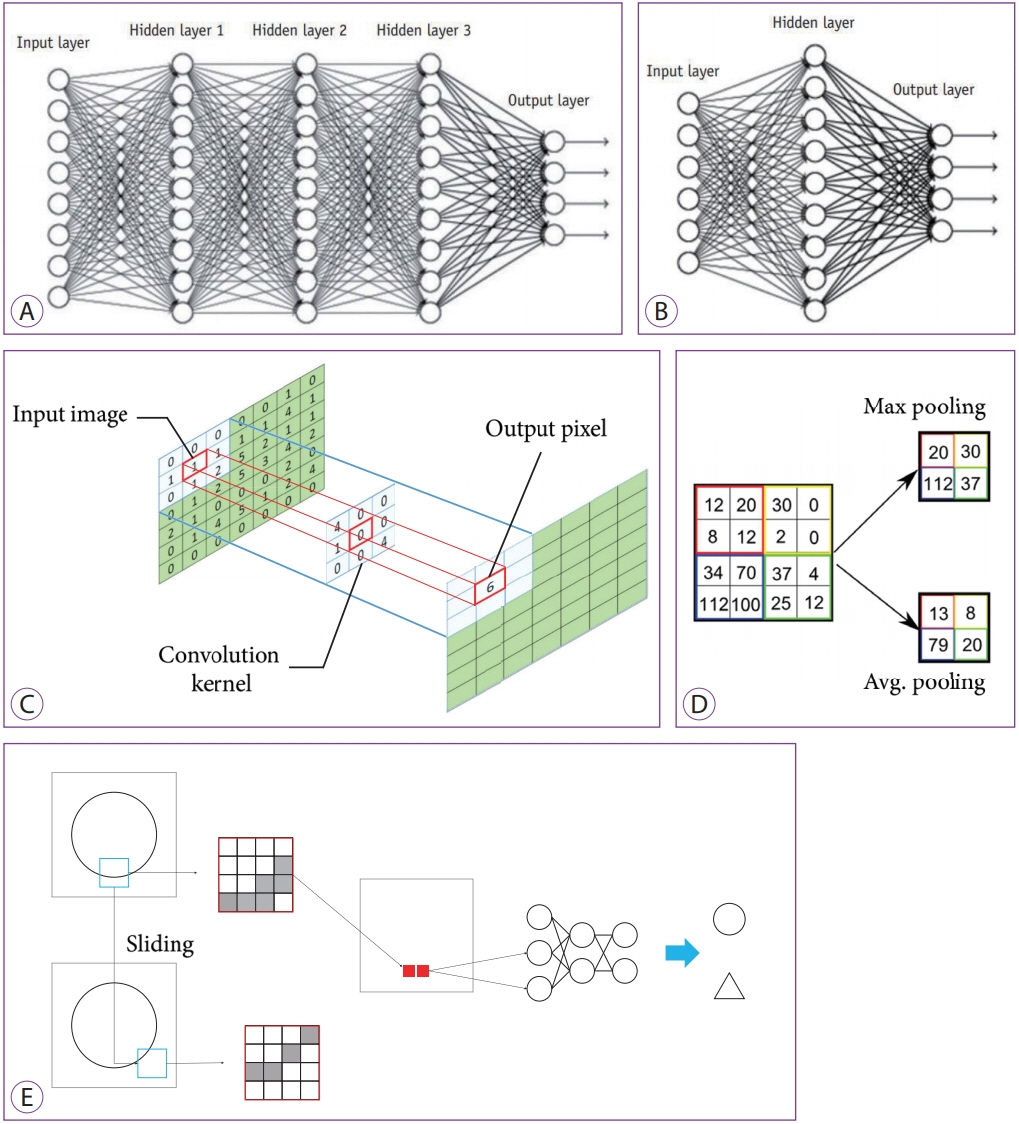

Recently, significant improvements have been made in artificial intelligence. The artificial neural network was introduced in the 1950s. However, because of the low computing power and insufficient datasets available at that time, artificial neural networks suffered from overfitting and from the vanishing gradient problem when deep networks were trained. The approach has since become more promising owing to enhanced big-data processing capability, greater computing power from parallel processing units, and new algorithms for deep neural networks. These networks are increasingly successful and are attracting interest in many domains, including computer vision, speech recognition, and natural language processing. Recent studies of this technology augur well for medical and healthcare applications, especially endoscopic imaging. This paper provides perspectives on the history, development, applications, and challenges of deep-learning technology.
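To make the vanishing gradient problem mentioned in the abstract concrete, here is a minimal Python sketch. It is not taken from the article; it is a simplified illustration that assumes a hypothetical chain of scalar sigmoid units, a_k = sigmoid(w * a_{k-1}), with a unit weight w = 1.0. Because the derivative of the logistic sigmoid never exceeds 0.25, the chain-rule product of per-layer derivatives shrinks at least geometrically with depth. The function names `sigmoid`, `sigmoid_grad`, and `chain_gradient` are illustrative assumptions.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    """Derivative of the sigmoid; its maximum value is 0.25, reached at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)


def chain_gradient(depth: int, x: float = 0.0, weight: float = 1.0) -> float:
    """Return d(a_depth)/d(x) for a hypothetical chain of scalar sigmoid units,
    a_k = sigmoid(weight * a_{k-1}) with a_0 = x, computed by the chain rule.
    Unit weight and scalar units are simplifying assumptions."""
    a = x
    grad = 1.0
    for _ in range(depth):
        z = weight * a                    # pre-activation of this layer
        a = sigmoid(z)                    # forward pass through this layer
        grad *= weight * sigmoid_grad(z)  # multiply in this layer's local derivative
    return grad


if __name__ == "__main__":
    # The gradient reaching the input shrinks rapidly as depth grows.
    for depth in (1, 5, 10, 20):
        print(f"depth={depth:2d}  gradient={chain_gradient(depth):.3e}")
```

With unit weights each layer multiplies the gradient by at most 0.25, so after 20 layers it is no larger than 0.25^20 (about 9e-13). This is one reason later deep networks favored activations such as ReLU, whose derivative is 1 for positive inputs.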


Cited by 1 article

- Dong Jun Oh, Kwang Seop Kim, Yun Jeong Lim. A New Active Locomotion Capsule Endoscopy under Magnetic Control and Automated Reading Program. Clin Endosc. 2020;53(4):395-401. doi: 10.5946/ce.2020.127.

Reference

-

1. McCarthy J, Minsky ML, Rochester N, Shannon CE. A proposal for the dartmouth summer research project on artificial intelligence, August 31, 1955. AI Mag. 2006; 27:12–14.2. Cortes C, Vapnik V. Support-vector networks. Mach Learn. 1995; 20:273–297.

Article3. Freund Y, Schapire RE. A decision-theoretic generalization of on-line learning and an application to boosting. J Comput Syst Sci. 1997; 55:119–139.

Article4. Perronnin F, Sánchez J, Mensink T. Improving the Fisher kernel for large-scale image classification. In : In: 11th European Conference on Computer Vision; 2010 Sep 5-11; Heraklion, Greece. Berlin. Springer Berlin Heidelberg. 2010. p. 143–156.

Article5. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems 25 (NIPS 2012) 2012:1097-1105.6. Wu Z, Wang X, Jiang YG, Ye H, Xue X. Modeling spatial-temporal clues in a hybrid deep learning framework for video classification. In : In: Proceedings of the 23rd ACM International Conference on Multimedia; 2015 Oct; Brisbane, Australia. New York (NY). Association for Computing Machinery. 2015. p. 461–470.

Article7. Jia Y, Shelhamer E, Donahue J, et al. Caffe: convolutional architecture for fast feature embedding. ArXiv.org 2014. Available from: https://arxiv.org/abs/1408.5093.8. Kobatake H, Yoshinaga Y. Detection of spicules on mammogram based on skeleton analysis. IEEE Trans Med Imaging. 1996; 15:235–245.

Article9. Sung K, Poggio T. Example-based learning for view-based human face detection. IEEE Trans Pattern Anal Mach Intell. 1998; 20:39–51.

Article10. Felzenszwalb PF, Girshick RB, McAllester D, Ramanan D. Object detection with discriminatively trained part-based models. IEEE Trans Pattern Anal Mach Intell. 2010; 32:1627–1645.

Article11. Dollár P, Wojek C, Schiele B, Perona P. Pedestrian detection: an evaluation of the state of the art. IEEE Trans Pattern Anal Mach Intell. 2012; 34:743–761.12. Liu W, Anguelov D, Erhan D, et al. SSD: single shot multibox detector. ArXiv.org 2016. Available from: https://arxiv.org/abs/1512.02325.13. Redmon J, Divvala S, Girshick R, Farhadi A. You only look once: unified, real-time object detection. ArXiv.org 2016. Available from: https://arxiv.org/abs/1506.02640.

Article14. Lin T-Y, Goyal P, Girshick R, He K, Dollár P. Focal loss for dense object detection. ArXiv.org 2018. Available from: https://arxiv.org/abs/1708.02002.15. Deng J, Dong W, Socher R, Li L, Kai L, Li FF. ImageNet: a large-scale hierarchical image database. In : In: 2009 IEEE Conference on Computer Vision and Pattern Recognition; 2009 Jun 20-25; Miami (FL), USA. Piscataway (NJ). IEEE. 2009. p. 248–255.

Article16. Uijlings JRR, van de Sande KEA, Gevers T, Smeulders AWM. Selective search for object recognition. Int J Comput Vis. 2013; 104:154–171.

Article17. Girshick R, Donahue J, Darrell T, Malik J. Rich feature hierarchies for accurate object detection and semantic segmentation. ArXiv.org 2014. Available from: https://arxiv.org/abs/1311.2524.

Article18. Dai J, He K, Sun J. Instance-aware semantic segmentation via multitask network cascades. ArXiv.org 2015. Available from: https://arxiv.org/abs/1512.04412.

Article19. He K, Gkioxari G, Dollár P, Girshick R. Mask R-CNN. ArXiv.org 2018. Available from: https://arxiv.org/abs/1703.06870.20. Arnab A, Torr PHS. Pixelwise instance segmentation with a dynamically instantiated network. ArXiv.org 2017. Available from: https://arxiv.org/abs/1704.02386.

Article21. Bolya D, Zhou C, Xiao F, Lee YJ. YOLACT: real-time instance segmentation. ArXiv.org 2019. Available from: https://arxiv.org/abs/1904.02689.22. Goodfellow IJ, Pouget-Abadie J, Mirza M. Generative adversarial networks. ArXiv.org 2014. Available from: https://arxiv.org/abs/1406.2661.23. Chen TCT, Liu CL, Lin HD. Advanced artificial neural networks. Algorithms. 2018; 11:102.

Article24. Chen YY, Lin YH, Kung CC, Chung MH, Yen IH. Design and implementation of cloud analytics-assisted smart power meters considering advanced artificial intelligence as edge analytics in demand-side management for smart homes. Sensors (Basel). 2019; 19:E2047.

Article25. Rosenblatt F. The perceptron, a perceiving and recognizing automaton: (Project Para). Buffalo (NY): Cornell Aeronautical Laboratory;p. 85-460-1. 1957.26. Marcus G. Hyping artificial intelligence, yet again [Internet]. New York (NY): The New Yorker;c2013. [cited 2020 Jan 29]. Available from: https://www.newyorker.com/tech/elements/hyping-artificial-intelligence-yet-again.27. Hanson SJ. A stochastic version of the delta rule. Physica D. 1990; 42:265–272.

Article28. Amari S. A theory of adaptive pattern classifiers. IEEE Trans Comput. 1967; EC-16:299–307.

Article29. Minsky ML, Papert SA. Perceptrons : an introduction to computational geometry. Cambridge (MA): Institute of Technology;1969.30. LeCun Y, Boser B, Denker JS, et al. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989; 1:541–551.

Article31. Hinton GE, Salakhutdinov RR. Reducing the dimensionality of data with neural networks. Science. 2006; 313:504–507.

Article32. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. ArXiv.org 2015. Available from: https://arxiv.org/abs/1512.03385.33. Silver D, Huang A, Maddison CJ, et al. Mastering the game of Go with deep neural networks and tree search. Nature. 2016; 529:484–489.

Article34. Fiesler E, Beale R. Handbook of neural computation. Bristol: Institute of Physics Pub;1997.35. Hubel DH, Wiesel TN. Receptive fields and functional architecture of monkey striate cortex. J Physiol. 1968; 195:215–243.

Article36. Katole AL, Yellapragada KP, Bedi AK, Kalra SS, Chaitanya MS. Hierarchical deep learning architecture for 10K objects classification. ArXiv.org 2015. Available from: https://arxiv.org/abs/1509.01951.

Article37. Lee JG, Jun S, Cho YW, et al. Deep learning in medical imaging: general overview. Korean J Radiol. 2017; 18:570–584.

Article38. Fenton JJ, Taplin SH, Carney PA, et al. Influence of computer-aided detection on performance of screening mammography. N Engl J Med. 2007; 356:1399–1409.

Article39. Lehman CD, Wellman RD, Buist DS, Kerlikowske K, Tosteson AN, Miglioretti DL. Diagnostic accuracy of digital screening mammography with and without computer-aided detection. JAMA Intern Med. 2015; 175:1828–1837.

Article40. Endo T, Awakawa T, Takahashi H, et al. Classification of Barrett’s epithelium by magnifying endoscopy. Gastrointest Endosc. 2002; 55:641–647.

Article41. Lee J, Oh J, Shah SK, Yuan X, Tang SJ. Automatic classification of digestive organs in wireless capsule endoscopy videos. In : In: Proceedings of the 2007 ACM symposium on Applied computing; 2007 Mar; Seoul, Korea. New York (NY). Association for Computing Machinery. 2007. p. 1041–1045.

Article42. Tanaka K, Toyoda H, Kadowaki S, et al. Surface pattern classification by enhanced-magnification endoscopy for identifying early gastric cancers. Gastrointest Endosc. 2008; 67:430–437.

Article43. Stehle T, Auer R, Gross S, et al. Classification of colon polyps in NBI endoscopy using vascularization features. In : In: SPIE Medical Imaging; 2009 Feb 7-12; Lake Buena Vista (FL), USA. 2009.

Article44. Song EM, Park B, Ha CA, et al. Endoscopic diagnosis and treatment planning for colorectal polyps using a deep-learning model. Sci Rep. 2020; 10:30.

Article45. Wimmer G, Vécsei A, Uhl A. CNN transfer learning for the automated diagnosis of celiac disease. In : In: 2016 Sixth International Conference on Image Processing Theory, Tools and Applications (IPTA); 2016 Dec 12-15; Oulu, Finland. Piscataway (NJ). IEEE. 2016. p. 1–6.

Article46. Zhang R, Zheng Y, Mak TW, et al. Automatic detection and classification of colorectal polyps by transferring low-level CNN features from nonmedical domain. IEEE J Biomed Health Inform. 2017; 21:41–47.

Article47. Yoon HJ, Kim S, Kim JH, et al. A lesion-based convolutional neural network improves endoscopic detection and depth prediction of early gastric cancer. J Clin Med. 2019; 8:E1310.

Article48. Corley DA, Levin TR, Doubeni CA. Adenoma detection rate and risk of colorectal cancer and death. N Engl J Med. 2014; 370:2541.

Article49. Ahn SB, Han DS, Bae JH, Byun TJ, Kim JP, Eun CS. The miss rate for colorectal adenoma determined by quality-adjusted, back-to-back colonoscopies. Gut Liver. 2012; 6:64–70.

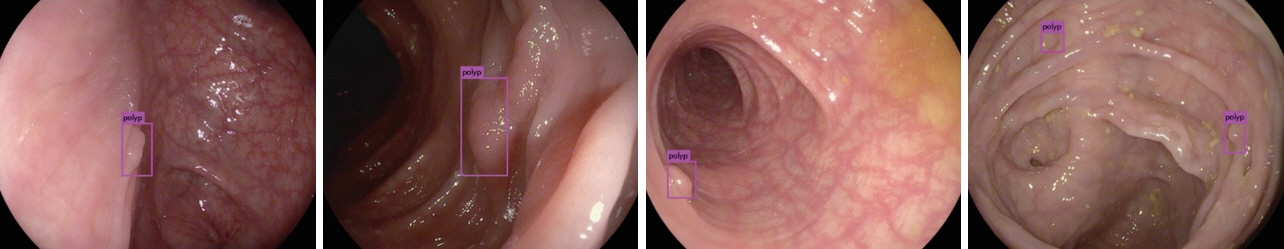

Article50. Misawa M, Kudo SE, Mori Y, et al. Artificial intelligence-assisted polyp detection for colonoscopy: initial experience. Gastroenterology. 2018; 154:2027–2029. e3.

Article51. Urban G, Tripathi P, Alkayali T, et al. Deep learning localizes and identifies polyps in real time with 96% accuracy in screening colonoscopy. Gastroenterology. 2018; 155:1069–1078. e8.

Article52. Zheng Y, Zhang R, Yu R, et al. Localisation of colorectal polyps by convolutional neural network features learnt from white light and narrow band endoscopic images of multiple databases. Conf Proc IEEE Eng Med Biol Soc. 2018; 2018:4142–4145.

Article53. Zhang X, Chen F, Yu T, et al. Real-time gastric polyp detection using convolutional neural networks. PLoS One. 2019; 14:e0214133.

Article54. Li B, Meng MQ, Xu L. A comparative study of shape features for polyp detection in wireless capsule endoscopy images. Conf Proc IEEE Eng Med Biol Soc. 2009; 2009:3731–3734.55. Liaqat A, Khan MA, Shah JH, Sharif M, Yasmin M, Fernandes SL. Automated ulcer and bleeding classification from WCE images using multiple features fusion and selection. J Mech Med Biol. 2018; 18:1850038.

Article56. Fan S, Xu L, Fan Y, Wei K, Li L. Computer-aided detection of small intestinal ulcer and erosion in wireless capsule endoscopy images. Phys Med Biol. 2018; 63:165001.

Article57. Alaskar H, Hussain A, Al-Aseem N, Liatsis P, Al-Jumeily D. Application of convolutional neural networks for automated ulcer detection in wireless capsule endoscopy images. Sensors (Basel). 2019; 19:E1265.

Article58. Wyatt CL, Ge Y, Vining DJ. Automatic segmentation of the colon for virtual colonoscopy. Comput Med Imaging Graph. 2000; 24:1–9.

Article59. Gross S, Kennel M, Stehle T, et al. Polyp segmentation in NBI colonoscopy. In : Meinzer HP, Deserno TM, Handels H, Tolxdorff T, editors. Bildverarbeitung für die medizin 2009. Berlin: Springer;Berlin, Heidelberg: 2009. p. 232–256.60. Hwang S, Celebi ME. Polyp detection in wireless capsule endoscopy videos based on image segmentation and geometric feature. In : In: 2010 IEEE International Conference on Acoustics, Speech and Signal Processing; 2010 Mar 14-19; Dallas (TX), USA. Piscataway (NJ). IEEE. 2010. p. 678–681.

Article61. Arnold M, Ghosh A, Ameling S, Lacey G. Automatic segmentation and inpainting of specular highlights for endoscopic imaging. EURASIP J Image Video Process. 2010; 2010:814319.

Article62. Ganz M, Xiaoyun Y, Slabaugh G. Automatic segmentation of polyps in colonoscopic narrow-band imaging data. IEEE Trans Biomed Eng. 2012; 59:2144–2151.

Article63. Condessa F, Bioucas-Dias J. Segmentation and detection of colorectal polyps using local polynomial approximation. In : Campilho A, Kamel M, editors. Image analysis and recognition. Berlin: Springer;Berlin, Heidelberg: 2012. p. 188–197.64. Tuba E, Tuba M, Jovanovic R. An algorithm for automated segmentation for bleeding detection in endoscopic images. In : In: 2017 International Joint Conference on Neural Networks (IJCNN); 2017 May 14-19; Anchorage (AK), USA. Piscataway (NJ). IEEE. 2017. p. 4579–4586.

Article65. Boonpogmanee I. Fully convolutional neural networks for semantic segmentation of polyp images taken during colonoscopy. Am J Gastroenterol. 2018; 113:S1532.

Article66. Brandao P, Mazomenos E, Ciuti G, et al. Fully convolutional neural networks for polyp segmentation in colonoscopy. In : In: SPIE Medical Imaging; 2017 Mar 3; Orlando (FL), USA. 2017.

Article67. Ker J, Wang L, Rao J, Lim T. Deep learning applications in medical image analysis. IEEE Access. 2018; 6:9375–9389.

Article68. Salehinejad H, Valaee S, Dowdell T, Colak E, Barfett J. Generalization of deep neural networks for chest pathology classification in X-rays using generative adversarial networks. In : In: 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); 2018 Apr 15-20; Calgary, Canada. Piscataway (NJ). IEEE. 2018. p. 990–994.

Article69. Frid-Adar M, Diamant I, Klang E, Amitai M, Goldberger J, Greenspan H. GAN-based synthetic medical image augmentation for increased CNN performance in liver lesion classification. Neurocomputing. 2018; 321:321–331.

Article70. Shorten C, Khoshgoftaar TM. A survey on image data augmentation for deep learning. J Big Data. 2019; 6:60.

Article71. Shin Y, Qadir HA, Balasingham I. Abnormal colon polyp image synthesis using conditional adversarial networks for improved detection performance. IEEE Access. 2018; 6:56007–56017.

Article72. Kanayama T, Kurose Y, Tanaka K, et al. Gastric cancer detection from endoscopic images using synthesis by GAN. In : Shen D, Liu T, Peters TM, editors. Medical image computing and computer assisted intervention – MICCAI 2019. Cham: Springer;Cham: 2019. p. 530–538.73. Ahn J, Loc HN, Balan RK, Lee Y, Ko J. Finding small-bowel lesions: challenges in endoscopy-image-based learning systems. Computer. 2018; 51:68–76.

Article74. Mahmood F, Chen R, Durr NJ. Unsupervised reverse domain adaptation for synthetic medical images via adversarial training. IEEE Trans Med Imaging. 2018; 37:2572–2581.

Article75. Esteban-Lansaque A, Sanchez C, Borras A, Gil D. Augmentation of virtual endoscopic images with intra-operative data using content-nets. bioRxivorg. 2019; 681825.

Article76. Rau A, Edwards PJE, Ahmad OF, et al. Implicit domain adaptation with conditional generative adversarial networks for depth prediction in endoscopy. Int J Comput Assist Radiol Surg. 2019; 14:1167–1176.

Article


Similar articles

- Overview of Deep Learning in Gastrointestinal Endoscopy

- Lesion-Based Convolutional Neural Network in Diagnosis of Early Gastric Cancer

- The Future of Capsule Endoscopy: The Role of Artificial Intelligence and Other Technical Advancements

- A Narrative Review on the Application of Artificial Intelligence on the Diagnosis and Outcome Prediction for Spinal Diseases

- Application of artificial intelligence for diagnosis of early gastric cancer based on magnifying endoscopy with narrow-band imaging