J Korean Soc Med Inform.

2009 Dec;15(4):475-481.

Variable Threshold based Feature Selection using Spatial Distribution of Data

- Affiliations

- 1Biomedical Informatics Technology Center, Keimyung University, Korea.

- 2Department of Medical Informatics, School of Medicine, Keimyung University, Korea. hjpark@dsmc.or.kr

- 3Department of Internal Medicine, School of Medicine, Keimyung University, Korea.

Abstract

OBJECTIVE

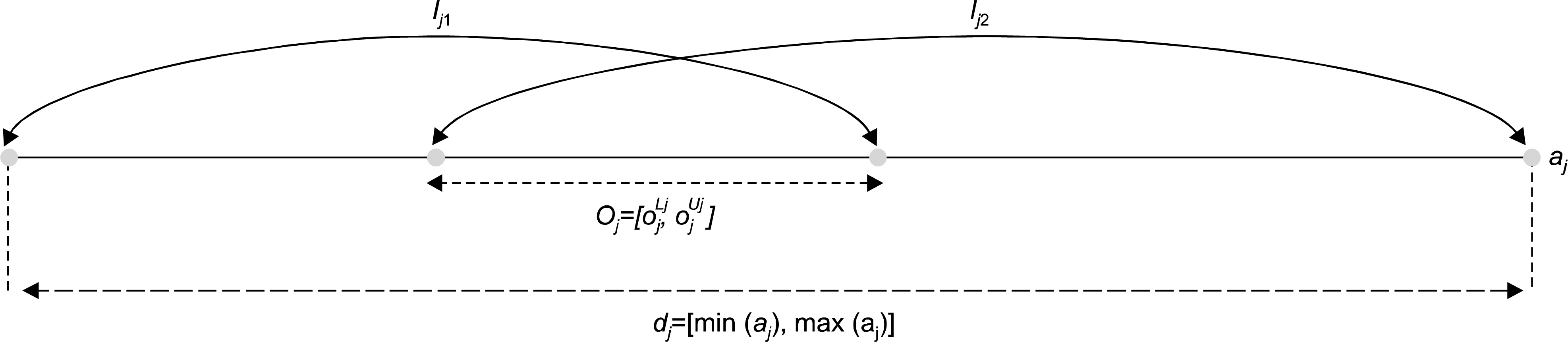

In processing high-dimensional clinical data, choosing the optimal subset of features is important, not only to reduce the computational complexity but also to improve the value of the model constructed from the given data. This study proposes an efficient feature selection method with a variable threshold.

METHODS

In the proposed method, the spatial distribution of labeled data with non-redundant attribute values in the overlapping regions was used to evaluate the degree of intra-class separation, and the weighted average of the redundant attribute values was used to select the cut-off value of each feature.

RESULTS

The effectiveness of the proposed method was demonstrated on a dyspnea patients' dataset, with 11 features selected from 55 by clinical experts, by comparing its experimental results with those obtained using seven other classification methods.

CONCLUSION

The proposed method can work well for clinical data mining and pattern classification applications.
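The abstract does not give the exact formulas, so the following is only a rough sketch of the general idea described under METHODS, not the authors' algorithm. It assumes a binary label, takes the "overlapping region" of a feature to be the interval where the value ranges of the two classes intersect, scores a feature by the fraction of samples that fall outside that interval, and takes the cut-off as the count-weighted average of the distinct values inside it. The function names `overlap_score_and_cutoff` and `select_features` are hypothetical.

```python
import numpy as np


def overlap_score_and_cutoff(x, y):
    """Score one feature by class overlap (assumed definition, see lead-in).

    x: 1-D array of feature values; y: 1-D array of binary labels (0/1).
    Returns (score, cutoff), where score is the fraction of samples lying
    outside the region in which the two class ranges overlap.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y)
    a, b = x[y == 0], x[y == 1]

    # Overlapping region: intersection of the two per-class value ranges.
    lo = max(a.min(), b.min())
    hi = min(a.max(), b.max())
    in_overlap = (x >= lo) & (x <= hi)

    # Fewer samples in the overlap means better separation on this feature.
    score = 1.0 - in_overlap.mean()

    if in_overlap.any():
        # Weighted average of the distinct overlapping values,
        # each weighted by how often it occurs.
        vals, counts = np.unique(x[in_overlap], return_counts=True)
        cutoff = np.average(vals, weights=counts)
    else:
        # Ranges are disjoint: use the midpoint of the gap between them.
        cutoff = (lo + hi) / 2.0
    return score, cutoff


def select_features(X, y, k):
    """Return the indices of the k features with the highest score."""
    X = np.asarray(X, dtype=float)
    scores = np.array(
        [overlap_score_and_cutoff(X[:, j], y)[0] for j in range(X.shape[1])]
    )
    return np.argsort(-scores)[:k]
```

In this sketch each feature receives its own cut-off, which is one possible reading of "variable threshold"; a real implementation would follow the definitions in the full paper.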
