Healthc Inform Res. 2011 Jun;17(2):120-130. doi: 10.4258/hir.2011.17.2.120.

Evaluation of Term Ranking Algorithms for Pseudo-Relevance Feedback in MEDLINE Retrieval

Affiliations

1. Medical Information Center, Seoul National University Bundang Hospital, Seongnam, Korea.
2. Department of Biomedical Engineering, Seoul National University College of Medicine, Seoul, Korea. jinchoi@snu.ac.kr

- KMID: 1447789

- DOI: http://doi.org/10.4258/hir.2011.17.2.120

Abstract

OBJECTIVES

The purpose of this study was to investigate the effects of query expansion algorithms for MEDLINE retrieval within a pseudo-relevance feedback framework.

METHODS

A number of query expansion algorithms based on pseudo-relevance feedback were tested using various term ranking formulas. The OHSUMED test collection, a subset of the MEDLINE database, was used as the test corpus. Each term ranking algorithm was tested in combination with different term re-weighting algorithms.
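The pairing of a term ranking formula with a re-weighting algorithm can be illustrated with a small sketch. It is not the authors' implementation: the frequency-based ranker is a hypothetical placeholder for any of the ranking formulas tested, `rocchio_reweight` is Rocchio's formula without a negative-feedback term (as is usual when the feedback documents are only assumed relevant), and the defaults `k_docs=10`, `n_terms=20`, `alpha=1.0`, `beta=0.75` are illustrative assumptions.

```python
from collections import Counter
from typing import Callable, Dict, List

Doc = List[str]            # a tokenized document
Query = Dict[str, float]   # term -> weight
# A term ranking function orders candidate expansion terms, best first.
TermRanker = Callable[[List[Doc], Query], List[str]]


def rank_by_frequency(feedback_docs: List[Doc], query: Query) -> List[str]:
    """Hypothetical placeholder ranker: total frequency in the feedback set,
    excluding original query terms; ties broken alphabetically."""
    counts = Counter(t for doc in feedback_docs for t in doc if t not in query)
    return [t for t, _ in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))]


def rocchio_reweight(query: Query, feedback_docs: List[Doc],
                     alpha: float = 1.0, beta: float = 0.75) -> Query:
    """Rocchio without a negative term: alpha*q + beta*(centroid of feedback tf vectors)."""
    centroid: Counter = Counter()
    for doc in feedback_docs:
        for term, tf in Counter(doc).items():
            centroid[term] += tf / len(feedback_docs)
    terms = set(query) | set(centroid)
    return {t: alpha * query.get(t, 0.0) + beta * centroid[t] for t in terms}


def expand_query(query: Query, ranked_docs: List[Doc], rank_terms: TermRanker,
                 k_docs: int = 10, n_terms: int = 20,
                 alpha: float = 1.0, beta: float = 0.75) -> Query:
    """Pseudo-relevance feedback: treat the top k_docs as relevant, add the
    n_terms best-ranked new terms, then re-weight original + added terms."""
    feedback = ranked_docs[:k_docs]
    new_terms = rank_terms(feedback, query)[:n_terms]
    weights = rocchio_reweight(query, feedback, alpha, beta)
    keep = set(query) | set(new_terms)
    return {t: w for t, w in weights.items() if t in keep}


if __name__ == "__main__":
    docs = [["asthma", "steroid", "inhaled", "steroid"],
            ["asthma", "inhaled", "child"],
            ["fracture", "bone"]]
    expanded = expand_query({"asthma": 1.0}, docs, rank_by_frequency, k_docs=2, n_terms=2)
    print(sorted(expanded.items()))
```

Because the ranker is passed in as a function, swapping in a different ranking formula, or replacing `rocchio_reweight` with another re-weighting scheme, changes one argument rather than the feedback loop; that separation mirrors the ranking/re-weighting combinations the study compares.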

RESULTS

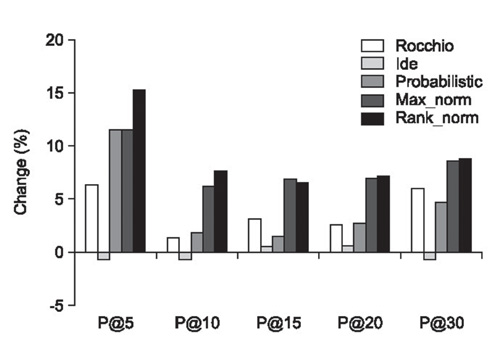

Our comprehensive evaluation showed that the local context analysis ranking algorithm, when combined with any one of the re-weighting algorithms (Rocchio, the probabilistic model, or our variants), significantly outperformed the other algorithm combinations, by up to 12% (paired t-test, p < 0.05). In a pseudo-relevance feedback framework, effective query expansion therefore depends on careful selection of the term ranking and re-weighting algorithm pair, at least for the OHSUMED corpus.
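Significance claims such as the one above are typically computed from per-query average precision (AP): each system's mean average precision (MAP) is the mean of its per-query AP values, and a paired t-test is run on the per-query differences between two systems. The following standard-library sketch shows those steps under the assumption of binary relevance judgments; it returns the t statistic and degrees of freedom only. The two-sided p-value then comes from Student's t distribution with n - 1 degrees of freedom (SciPy's `scipy.stats.ttest_rel` performs both steps, if SciPy is available). The function names are hypothetical.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence, Set, Tuple


def average_precision(ranking: Sequence[str], relevant: Set[str]) -> float:
    """Non-interpolated AP: sum of precision@k at each relevant hit, divided by |relevant|."""
    if not relevant:
        return 0.0
    hits, total = 0, 0.0
    for k, doc_id in enumerate(ranking, start=1):
        if doc_id in relevant:
            hits += 1
            total += hits / k
    return total / len(relevant)


def mean_average_precision(runs: List[Sequence[str]], qrels: List[Set[str]]) -> float:
    """MAP: AP averaged over queries (runs[i] is the ranking returned for query i)."""
    return mean(average_precision(r, rel) for r, rel in zip(runs, qrels))


def paired_t(ap_a: Sequence[float], ap_b: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic on per-query AP differences; returns (t, degrees of freedom)."""
    diffs = [a - b for a, b in zip(ap_a, ap_b)]
    if len(diffs) < 2:
        raise ValueError("need at least two queries")
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("all differences identical: t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1
```

Pairing by query matters because per-query AP varies far more across queries than between systems; testing the differences removes that shared query difficulty from the variance.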

CONCLUSIONS

Comparative experiments on term ranking algorithms were performed on a subset of MEDLINE documents. On these medical documents, local context analysis, which uses co-occurrence with all query terms, significantly outperformed various term ranking methods based on frequency analysis and on distribution analysis. Furthermore, the experiments demonstrated that the term rank-based re-weighting method contributed to a marked improvement in mean average precision.
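The phrase "co-occurrence with all query terms" refers to a product-form score: a candidate term's score is a product over every query term, so a candidate that co-occurs with only some query terms is heavily penalized. The sketch below loosely follows the shape of the LCA score of Xu and Croft, but it is a simplified illustration, not the study's code. The constant `delta = 0.1`, the idf scaled by 1/5 and clamped to [0, 1], and the use of whole feedback documents (rather than passages) as the co-occurrence window are all assumptions of this sketch.

```python
import math
from collections import Counter
from typing import Dict, List


def lca_scores(query_terms: List[str], feedback_docs: List[List[str]],
               doc_freq: Dict[str, int], n_collection: int,
               delta: float = 0.1) -> Dict[str, float]:
    """Score candidate terms by co-occurrence with *every* query term in the
    feedback set (simplified, LCA-style product form; constants assumed)."""
    n = len(feedback_docs)
    if n < 2:
        raise ValueError("need at least two feedback documents (log n is a divisor)")
    tfs = [Counter(doc) for doc in feedback_docs]

    def idf(term: str) -> float:
        # Assumed scaled idf, clamped to [0, 1].
        df = max(doc_freq.get(term, 1), 1)
        return min(1.0, max(0.0, math.log10(n_collection / df) / 5.0))

    candidates = {t for tf in tfs for t in tf} - set(query_terms)
    scores: Dict[str, float] = {}
    for c in candidates:
        idf_c = idf(c)
        score = 1.0
        for w in query_terms:
            # Co-occurrence: sum over feedback docs of tf(c, d) * tf(w, d).
            co = sum(tf[c] * tf[w] for tf in tfs)
            degree = math.log10(co + 1) * idf_c / math.log10(n)
            # delta keeps the factor nonzero when c never co-occurs with w.
            score *= (delta + degree) ** idf(w)
        scores[c] = score
    return scores
```

For a query such as `["asthma", "steroid"]`, a candidate appearing alongside both terms keeps every factor large, while a candidate appearing only with "asthma" has its "steroid" factor fall to `delta ** idf("steroid")`, which drags the whole product down.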




Similar articles

- An Evaluation of Multiple Query Representations for the Relevance Judgments used to Build a Biomedical Test Collection

- Deep learning algorithms for identifying 79 dental implant types

- Improved quality and quantity of written feedback is associated with a structured feedback proforma

- The usefulness of the objective structured clinical examination conducted to student during clinical clerkship of emergency medicine

- Common models and approaches for the clinical educator to plan effective feedback encounters