Healthc Inform Res.

2023 Jan;29(1):64-74. 10.4258/hir.2023.29.1.64.

Healthcare Professionals’ Expectations of Medical Artificial Intelligence and Strategies for its Clinical Implementation: A Qualitative Study

- Affiliations

- 1Department of Digital Health, Samsung Advanced Institute for Health Sciences & Technology, Sungkyunkwan University, Seoul, Korea

- 2AVOMD Inc, Seoul, Korea

- 3Department of Industrial and Information Systems Engineering, Soongsil University, Seoul, Korea

- 4Department of Emergency Medicine, Samsung Medical Center, Sungkyunkwan University School of Medicine, Seoul, Korea

- 5Digital Innovation Center, Samsung Medical Center, Seoul, Korea

- KMID: 2539399

- DOI: http://doi.org/10.4258/hir.2023.29.1.64

Abstract

Objectives

Although medical artificial intelligence (AI) systems that assist healthcare professionals in critical care settings are expected to improve healthcare, skepticism remains about whether their potential has been fully realized. We therefore conducted a qualitative study with physicians and nurses to understand their needs, expectations, and concerns regarding medical AI; to explore how they expected to respond to medical AI recommendations that contradicted their own judgment; and to derive strategies for successfully implementing medical AI in practice.

Methods

Semi-structured interviews were conducted with 15 healthcare professionals working in the emergency room and intensive care unit of a tertiary teaching hospital in Seoul. The data were interpreted using summative content analysis. In total, 26 medical AI topics were extracted from the interviews. Eight were related to treatment recommendation, seven to diagnosis prediction, and seven to process improvement.

Results

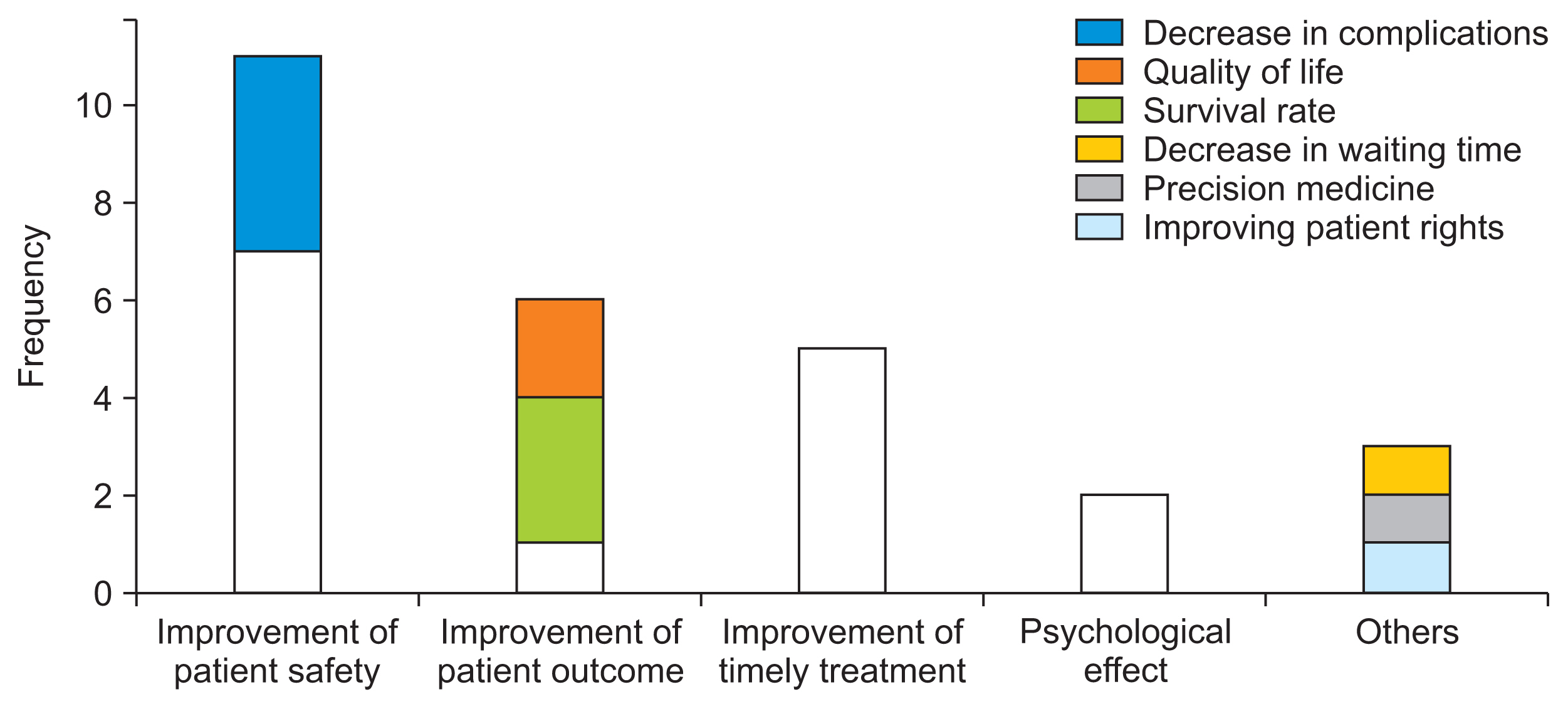

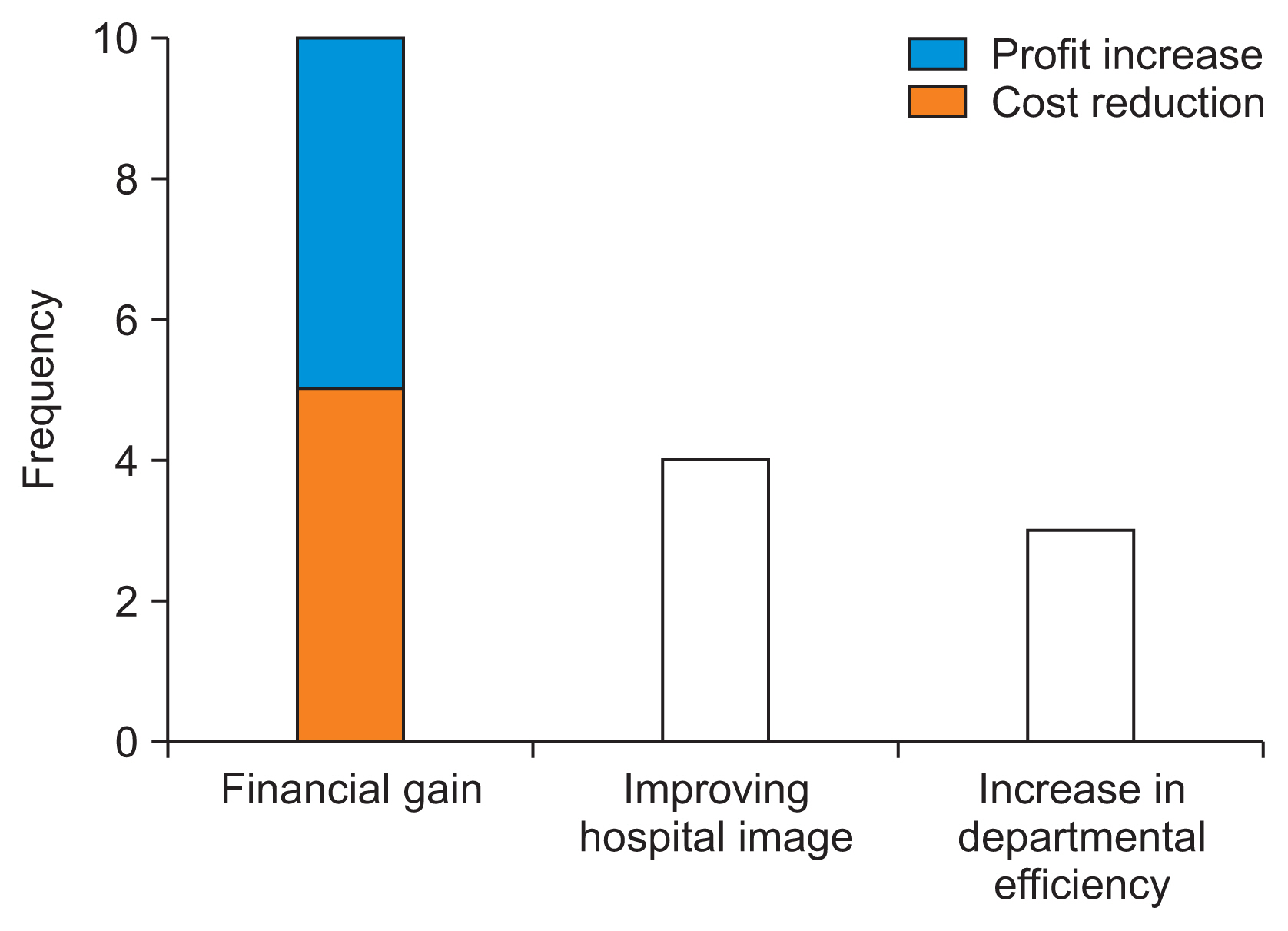

While the participants expressed expectations that medical AI could enhance their patients’ outcomes, increase work efficiency, and reduce hospital operating costs, they also mentioned concerns regarding distortions in the workflow, deskilling, alert fatigue, and unsophisticated algorithms. If medical AI decisions contradicted their judgment, most participants would consult other medical staff and thereafter reconsider their initial judgment.

Conclusions

Healthcare professionals wanted to use medical AI in practice and emphasized that AI systems should be trustworthy from their standpoint. They also highlighted the importance of managing alert fatigue and integrating AI systems into the workflow.

