J Educ Eval Health Prof. 2018;15:7. doi: 10.3352/jeehp.2018.15.7.

Components of the item selection algorithm in computerized adaptive testing

- Affiliation: Graduate Management Admission Council, Reston, VA, USA. truetheta@gmail.com

- KMID: 2439263

- DOI: http://doi.org/10.3352/jeehp.2018.15.7

Abstract

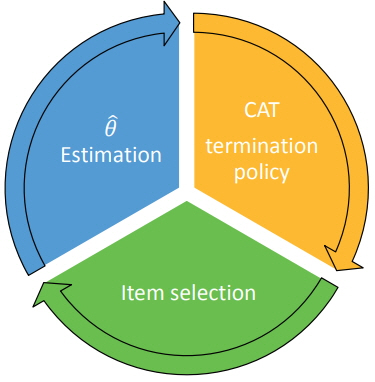

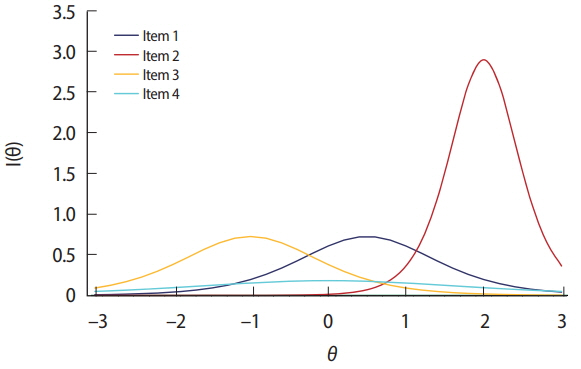

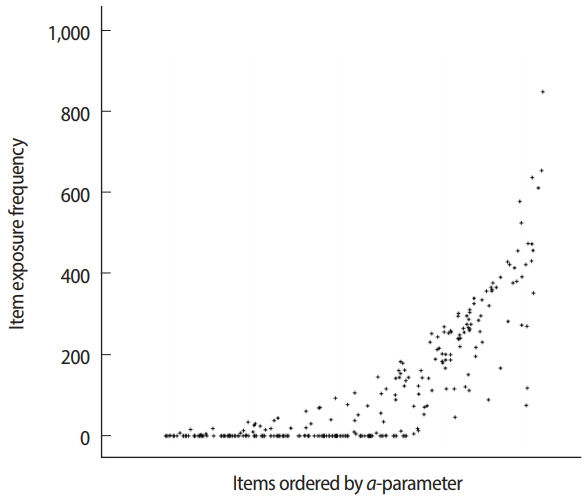

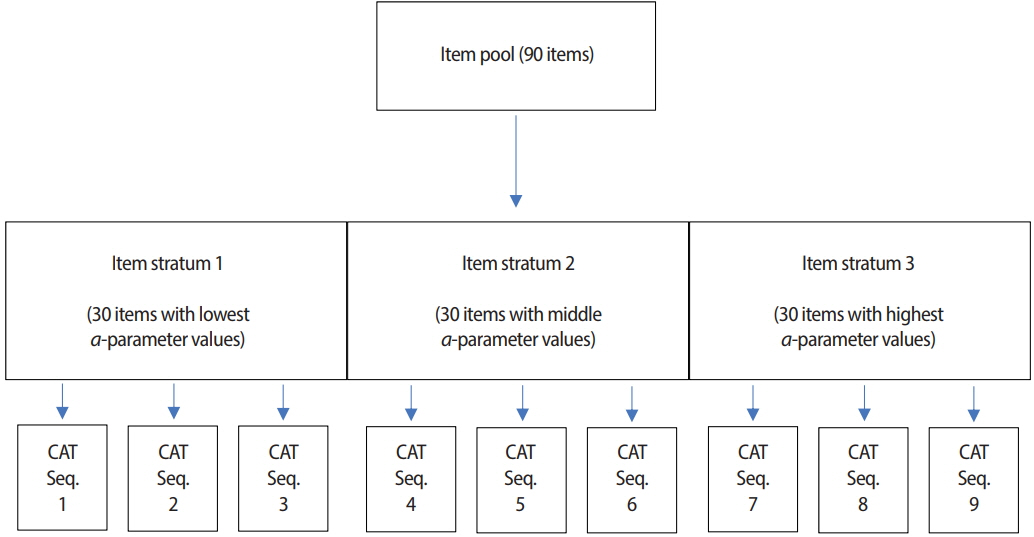

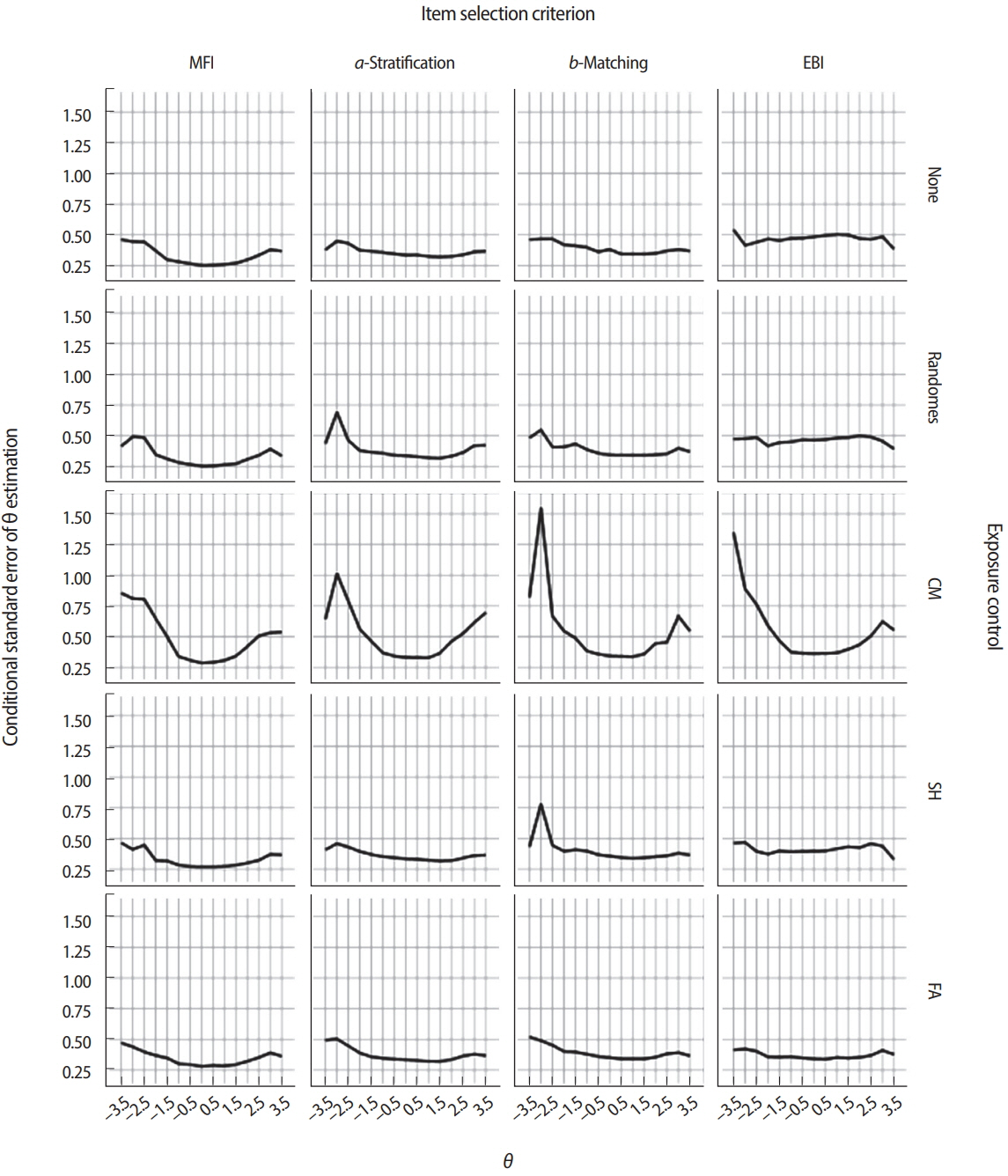

- Computerized adaptive testing (CAT) greatly improves measurement efficiency in high-stakes testing operations through the selection and administration of test items with the difficulty level that is most relevant to each individual test taker. This paper explains the 3 components of a conventional CAT item selection algorithm: test content balancing, the item selection criterion, and item exposure control. Several noteworthy methodologies underlie each component. The test script method and constrained CAT method are used for test content balancing. Item selection criteria include the maximized Fisher information criterion, the b-matching method, the a-stratification method, the weighted likelihood information criterion, the efficiency balanced information criterion, and the Kullback-Leibler information criterion. The randomesque method, the Sympson-Hetter method, the unconditional and conditional multinomial methods, and the fade-away method are used for item exposure control. Several holistic approaches to CAT use automated test assembly methods, such as the shadow test approach and the weighted deviation model. Item usage and exposure count vary depending on the item selection criterion and exposure control method. Finally, other important factors to consider when determining an appropriate CAT design are the computer resources requirement, the size of item pools, and the test length. The logic of CAT is now being adopted in the field of adaptive learning, which integrates the learning aspect and the (formative) assessment aspect of education into a continuous, individualized learning experience. Therefore, the algorithms and technologies described in this review may be able to help medical health educators and high-stakes test developers to adopt CAT more actively and efficiently.
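As a rough illustration of how the three components named in the abstract can fit together, the Python sketch below chains a simple content-balancing step, the maximized Fisher information criterion, and randomesque exposure control. It is not the paper's implementation. The three-parameter logistic (3PL) model, the scaling constant `D = 1.7`, the item dictionary layout (`id`, `area`, `a`, `b`, `c`), the function names, and the proportional content targets are all assumptions made for this example.

```python
import math
import random

D = 1.7  # assumed logistic scaling constant; some programs use D = 1.0


def p_3pl(theta, a, b, c):
    """Probability of a correct response under the (assumed) 3PL model."""
    return c + (1.0 - c) / (1.0 + math.exp(-D * a * (theta - b)))


def fisher_info(theta, item):
    """Fisher information of one 3PL item at ability level theta."""
    a, b, c = item["a"], item["b"], item["c"]
    p = p_3pl(theta, a, b, c)
    return (D * a) ** 2 * ((1.0 - p) / p) * ((p - c) / (1.0 - c)) ** 2


def next_content_area(targets, counts):
    """Content balancing: pick the area furthest below its target share.

    targets maps area -> desired proportion of the test;
    counts maps area -> number of items administered so far.
    """
    total = sum(counts.values())

    def gap(area):
        observed = counts[area] / total if total else 0.0
        return targets[area] - observed

    return max(targets, key=gap)


def select_item(theta, pool, administered, targets, counts, k=3, rng=random):
    """Select the next item for the current interim ability estimate theta."""
    # 1) Content balancing: restrict candidates to the most under-served area.
    area = next_content_area(targets, counts)
    unused = [it for it in pool if it["id"] not in administered]
    candidates = [it for it in unused if it["area"] == area] or unused
    if not candidates:
        raise ValueError("item pool is exhausted")
    # 2) Item selection criterion: rank candidates by Fisher information.
    ranked = sorted(candidates, key=lambda it: fisher_info(theta, it), reverse=True)
    # 3) Exposure control (randomesque): draw at random among the top k items.
    return rng.choice(ranked[:k])
```

With `k=1` the function reduces to plain maximum-information selection within the chosen content area. Larger values of `k` spread exposure across more items and give up some measurement efficiency in return, which is the kind of trade-off the abstract alludes to when it notes that item usage depends on the selection criterion and exposure control method.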

Cited by 2 articles

- Conducting simulation studies for computerized adaptive testing using SimulCAT: an instructional piece

  Kyung (Chris) Tyek Han, Sun Huh

  J Educ Eval Health Prof. 2018;15:20. doi: 10.3352/jeehp.2018.15.20.

- Updates from 2018: Being indexed in Embase, becoming an affiliated journal of the World Federation for Medical Education, implementing an optional open data policy, adopting principles of transparency and best practice in scholarly publishing, and appreciation to reviewers

  Sun Huh, A Ra Cho

  J Educ Eval Health Prof. 2018;15:36. doi: 10.3352/jeehp.2018.15.36.

- Similar articles

- Overview and current management of computerized adaptive testing in licensing/certification examinations

- Introduction to the LIVECAT web-based computerized adaptive testing platform

- Conducting simulation studies for computerized adaptive testing using SimulCAT: an instructional piece

- Where Can I Find the Free Item Analysis Program Based on Item Response Theory, Computer-Based Testing and Computerized Adaptive Testing?

- Estimation of an Examinee's Ability in the Web-Based Computerized Adaptive Testing Program IRT-CAT